3 Lessons from Launching Scribe

Scribe is a transcription and summarisation tool, built by our team at Open Government Products, that transforms conversations into structured case notes.

Every month, we feature lessons learned and behind-the-scenes stories from building our products for public good. This month, Caleb, our software engineer, shares the team’s journey in building a transcription and summarisation tool for medical social workers.

Last year, we launched Scribe to the Medical Social Workers (MSWs) working in our hospitals in Singapore. Scribe is a transcription and summarisation tool that transforms conversations into structured case notes.

Why did we build Scribe?

As MSWs write extensive case notes (i.e. documentation) for the patients they interact with, having a tool that can generate notes from their interactions is a massive time-saver.

We built Scribe in Jan 2024 during our annual hackathon, where small teams of 5 spend a month working on problem statements of their choosing. When we looked at existing tools in the space, we realised they all had at least 1 of these 3 issues:

Transcription was not fast

Transcription did not support local languages / accents

Transcription could not handle multilingual conversations

With these issues in mind, we made some technical tradeoffs when building Scribe so that it could quickly generate a reasonably good transcript of local multilingual conversations, but only after the session ends (i.e. no live transcription). This transcript would then be summarised into a meaningful case note.

Putting it in the hands of users

After a workable MVP in January, we productionised it and pilot-tested it in April with 1 hospital. We learned a lot from this and reworked the app significantly before the full rollout to all the hospitals between Aug and Oct 2024.

Now, Scribe is in the hands of 1000+ MSWs, where it’s used for >2.5K conversations a month and saves >400 hours of documentation time monthly. From our surveys, we found that Scribe was able to reduce time spent documenting a session by >40%.

Looking back, I want to share 3 lessons we learned from launching Scribe.

Users know what they need, but not what they want (so, pilot fast!)

Because my team also works on a patient management system for MSWs, we knew that documentation was a big pain point for them.

When an MSW interviews a patient, they cover topics like the patient’s family background, medical history, financial situation, and so on. This enables them to make a holistic assessment of the patient and to arrange for financial and other forms of assistance accordingly.

MSWs also have to document these interactions; a 1-hour conversation might translate to 30-45 minutes of case note writing. Multiply this by the number of patients they have to see in a day, and you can imagine why documentation feels time-consuming and tedious.

The problem was clear, so in our MVP, we took what we thought was a straightforward approach. Scribe only does two things:

Transcription: It records and transcribes conversations

Summarisation: It generates summaries from these transcripts

Users start a Scribe session on their mobile devices, which stream audio data to our backend systems. Once the session concludes, we generate a transcript from the audio using the open-source whisper-large-v3.
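To make the post-session flow concrete, here is a minimal sketch in Python. It assumes audio arrives as byte chunks and that transcription runs once, after the session concludes. The names `ScribeSession`, `receive_chunk`, `conclude` and `fake_transcribe` are made up for illustration and are not Scribe's actual code. The speech-to-text step is passed in as a plain function so the sketch runs without a model.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class ScribeSession:
    """Hypothetical in-memory buffer for one recording session."""

    chunks: list[bytes] = field(default_factory=list)
    concluded: bool = False

    def receive_chunk(self, chunk: bytes) -> None:
        # Audio arrives in pieces while the conversation is still going on.
        if self.concluded:
            raise RuntimeError("session already concluded")
        self.chunks.append(chunk)

    def conclude(self, transcribe: Callable[[bytes], str]) -> str:
        # No live transcription: the model runs once, on the full audio.
        self.concluded = True
        return transcribe(b"".join(self.chunks))


def fake_transcribe(audio: bytes) -> str:
    # Stand-in for a real speech-to-text call (e.g. to whisper-large-v3).
    return f"<transcript of {len(audio)} bytes of audio>"


session = ScribeSession()
session.receive_chunk(b"abc")
session.receive_chunk(b"de")
transcript = session.conclude(fake_transcribe)
print(transcript)
```

Waiting until the end trades away live feedback, but it lets the model see the whole recording at once, which fits the tradeoff described earlier.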

The transcript then shows up in the app on their computers, where users can generate a summary based on it. We gave them a handful of summary types to choose from: ‘Summary (paragraph)’, ‘Summary (bullet points)’, and ‘Actionables’.

They only had to select the type, and our LLM service would generate a summary based on the transcript and our hardcoded prompt, with section headers automatically generated based on the flow of the conversation.
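As a rough illustration of what "select a type, get a summary" could look like, the sketch below maps each summary type to a fixed instruction and appends the transcript. The instruction wording and the names `SUMMARY_INSTRUCTIONS` and `build_mvp_prompt` are assumptions for this example. The real hardcoded prompt is not shown in this post.

```python
# Hypothetical fixed instructions, one per summary type offered in the MVP.
SUMMARY_INSTRUCTIONS = {
    "Summary (paragraph)": "Summarise the conversation in paragraphs.",
    "Summary (bullet points)": "Summarise the conversation as bullet points.",
    "Actionables": "List the follow-up actions raised in the conversation.",
}


def build_mvp_prompt(summary_type: str, transcript: str) -> str:
    """Combine the fixed instruction for a summary type with the transcript."""
    if summary_type not in SUMMARY_INSTRUCTIONS:
        raise ValueError(f"unknown summary type: {summary_type!r}")
    return (
        f"{SUMMARY_INSTRUCTIONS[summary_type]}\n"
        # The MVP let the model pick its own headers; users had no say here.
        "Choose section headers that follow the flow of the conversation.\n\n"
        f"Transcript:\n{transcript}"
    )


mvp_prompt = build_mvp_prompt("Actionables", "MSW: How are you coping at home?")
print(mvp_prompt)
```

The user's only input is the summary type. Everything else, including the section headers, is left to the model.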

When we tested this with the MSWs in role-play conversations, they loved it. They understood the interface right away and were impressed by the quality of the summaries, with one remarking that it was “quite close to what [they] would write”. They were surprised by what it could come up with and that the generations were quite variable (i.e. the output generated would be slightly different every time), but were pleased that Scribe provided a comprehensive summary that they could quickly refine according to their needs.

With a workable MVP, we piloted it at 1 hospital in April 2024. We did a live demo with MSWs as participants (always a risky endeavour!), but it went well and the MSWs were excited to use it with real patients. In that first week of launch, we had 3 conversations Scribed. In the second week, it was 11. But in the third week, it was 0 and it stayed there for subsequent weeks.

When we realised our approach wasn’t working, we sat down with our pilot users to figure out what went wrong. After all, we had done plenty of role-play conversations and MVP testing before launching it. What was different?

It turned out our users hated not knowing what they were going to get from Scribe. Even before they start writing a case note, experienced MSWs already have a structure in mind for that note, down to the specific sections they would want to include.

What we thought was clever, i.e. auto-generated section headers based on the flow of the conversation, just created a lot more work for the MSWs as they had to rewrite and reorder the content to fit the order they had in mind. It took more time to read and rewrite the generated summary than to write it from scratch.

Clearly, Scribe wasn’t solving the problem they had. And users realised it wasn’t what they wanted only after trying it with real patients.

Do things that don’t scale

To figure out what to do next, we sat down with MSWs and their transcripts and compared Scribe’s summaries with their submitted case notes. The differences were stark.

We tried prompt engineering on the fly to see if we could better match the MSWs’ expected note structure. For each transcript that came in, we would iterate on the prompt in front of the MSW to see if we could get an output that better matched their expectations.

Of course, this manual prompt engineering would not scale in practice and we ran the risk of over-optimising for one MSW’s style or use case. But we learned a tremendous amount from doing it and understood the problem more deeply as a result.

We realised we had to give users some level of control over what gets generated. Our users are opinionated and already know what they want from Scribe. They just need it to adapt to their diverse documentation needs and writing styles.

At the same time, we were wary of the other extreme: giving MSWs the whole prompt to configure. The average user is not a prompt engineer.

We settled on a middle ground. We ask MSWs for just 3 things when generating a summary: (1) a description of who’s involved in the conversation, (2) the list of sections they want in the summary, and (3) their preferred writing style.
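A minimal sketch of this middle ground, assuming the three inputs are slotted into a prompt template that users never see or edit. The field names, template wording and the `SummaryRequest` and `build_prompt` names are hypothetical, not Scribe's actual implementation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SummaryRequest:
    """The three things an MSW provides (field names are illustrative)."""

    participants: str    # (1) who is involved in the conversation
    sections: list[str]  # (2) sections wanted in the summary, in order
    writing_style: str   # (3) preferred writing style


def build_prompt(request: SummaryRequest, transcript: str) -> str:
    """Fill a fixed template so users never have to edit the full prompt."""
    if not request.sections:
        raise ValueError("at least one section is required")
    section_lines = "\n".join(
        f"{i}. {name}" for i, name in enumerate(request.sections, start=1)
    )
    return (
        f"Participants: {request.participants}\n"
        "Write a case note with exactly these sections, in this order:\n"
        f"{section_lines}\n"
        f"Writing style: {request.writing_style}\n\n"
        f"Transcript:\n{transcript}"
    )


request = SummaryRequest(
    participants="An MSW and a patient's daughter",
    sections=["Family background", "Financial situation", "Follow-up plan"],
    writing_style="Concise, third person",
)
prompt = build_prompt(request, "MSW: Who lives with your mother?")
print(prompt)
```

Because the section list is passed through in the user's own order, the output structure is predictable, which was the main complaint about the auto-generated headers.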

~~Seeing~~ Trying is believing

Scribe is a unique product in OGP in that it’s a very individual tool.

Our other platform products, such as FormSG (basically, Google Forms for government) and Isomer (a website builder for government), really just need 1 enterprising user to set up a form/website for thousands to benefit from it.

Scribe, on the other hand, is just for you. How much you use it is really up to you and whether you are convinced of its value.

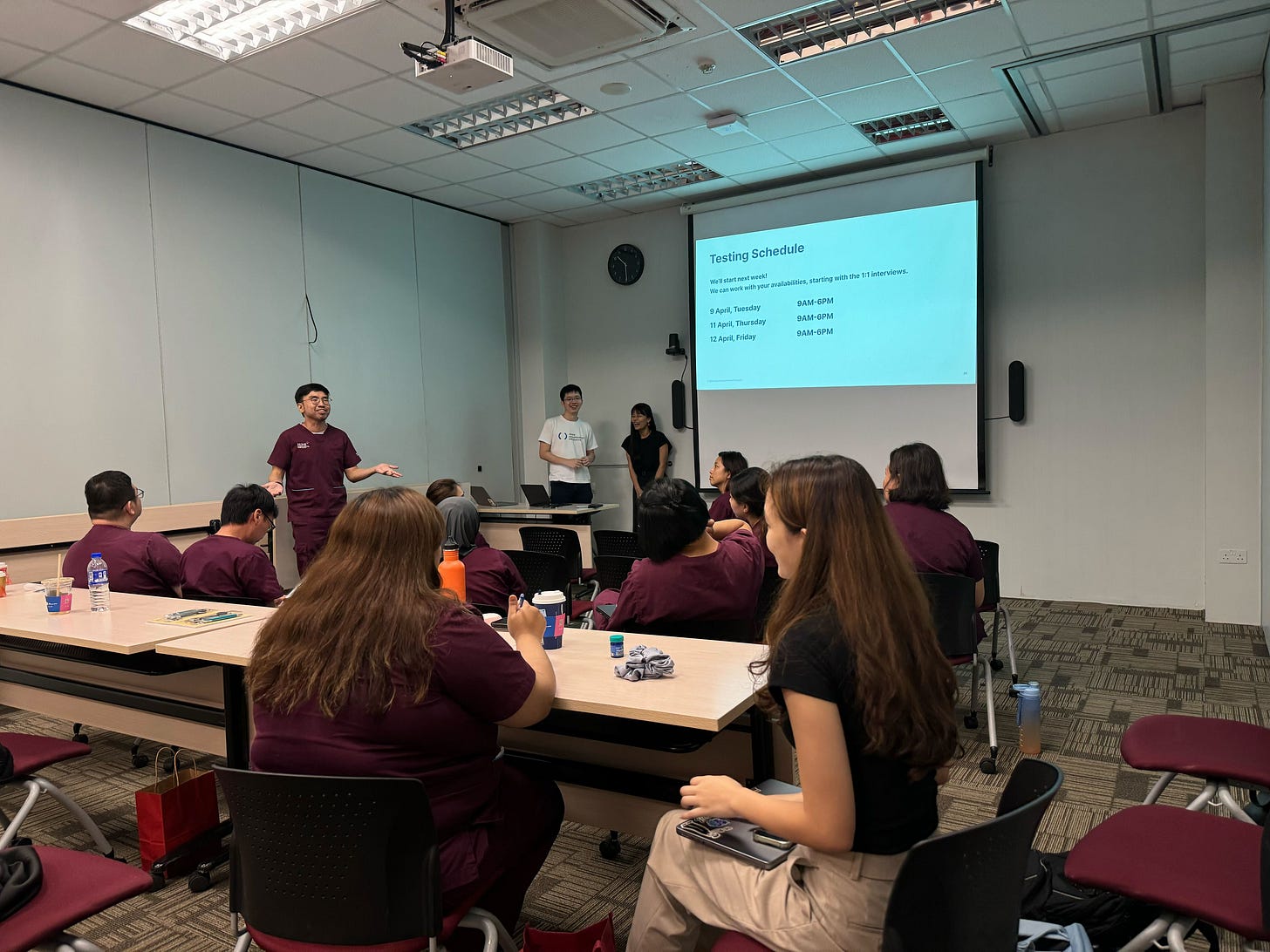

After we had tested our new summary generation interface, we started visiting the hospitals one by one to launch it with the MSWs. While taxing on us, these sessions were useful for generating a lot of buzz and excitement about the tool.

We tried a few different approaches in these launches to get user buy-in. In some, we shared real-life examples of Scribe generating impressive summaries of difficult conversations. In others, we got them to pair up with each other and role-play a conversation for Scribe. They would then generate a summary of the conversation they just had.

What worked best for generating sustained interest was having them try it for themselves. The institutions that had time to play with it during our 1-hour launch session were the most enthusiastic about it and adopted it into their workflows more readily.

Part of it was technical — there were mic / audio permissions issues that we could troubleshoot in person as they came up, so MSWs never got stuck trying to use the app.

But the bigger factor was psychological — a hands-on session forced them to get over the inertia of trying something new. And when they saw it could capture the local Singaporean accent, our multilingual speech, and the Singlish lah’s and leh’s that we sprinkle in our conversations, they were uniformly impressed and wanted to see for themselves if it would work well with actual patients.

What’s next for Scribe?

We have been pleasantly surprised by the positive reception to Scribe. We knew it would be useful, but the feedback that came our way, some of it unprompted, still went beyond what we expected.

One MSW told us that, thanks to Scribe, they had time to walk their dog before the sun set. A senior MSW shared that Scribe was not just a time-saver, but an equaliser: “Some of my MSWs have big hearts but can’t write well. Scribe levels the playing field.” Many also shared that because they could trust Scribe to take notes for them, they were able to more fully engage with their patients during conversations.

Scribe’s mission is to reduce documentation time so that users can focus on higher-value work. Having launched to the MSWs successfully, we are looking to launch Scribe to other user groups with similarly heavy documentation workloads.

We are already in the midst of expanding Scribe to the social workers in our social service agencies, as well as to the other healthcare professions in our hospitals. If you are from a Singapore agency or organisation that serves the public and you are interested in using Scribe, we would love to hear from you!

Scribe was built by a multidisciplinary team of engineers, designers, and product ops. Shoutout to the rest of the team: Qimmy, Latasha, Natasha, Wan Ling!

What are your thoughts on Scribe and our journey? Share your comments with us below!